Ethics in Tech — Intro

After joining Meta, I felt responsible for the impact of my work, so I started a study group with friends to discuss ethics in big tech every week. My exploration spans three levels:

- Humanity level: My relationship with technology

- Company level: How well my values align with Meta’s

- Individual level: My trust in Meta’s leadership

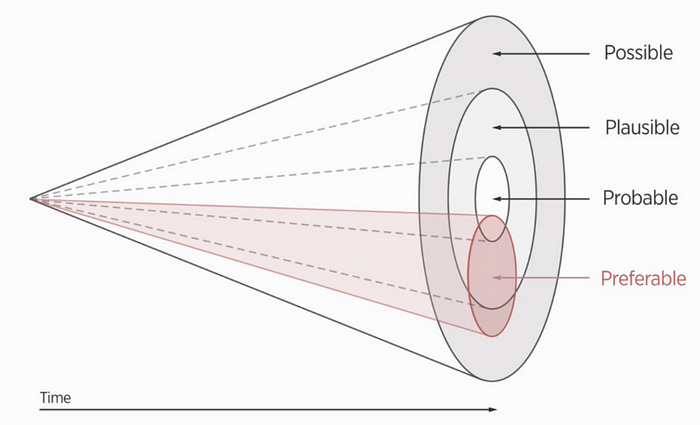

At the first level, the humanity level, I want to define my boundary with technology: understand what technology can achieve, what its secondary effects are, and where my moral boundary lies when it changes the trajectory of society. Utopia and dystopia are usually extreme versions of the future, exaggerated by the media, so we need to distinguish between probable, possible, and preferable futures.

Most dystopian shows portray a “possible future” and exploit our fear to push us to reject technology blindly, rather than to evaluate critically the future we want and how we can improve the status quo. Declaring “XXX is evil” ends the discussion, whereas truly understanding the complexity of society gives us the nuance to find improvements and imagine alternatives. The main questions I will ask at this level include:

What’s my ethical boundary with technology? To what extent do I believe it’s good or bad, and why?

If I don’t agree with the current direction of technology, what alternative future do we want to see?

At the second level, the company level, I will use the principles from the first level to evaluate Meta’s actions. Values drive choices, and choices guide actions. Though I love my work at Meta, I want to understand how Meta responds to public criticism and where it stands when secondary effects, such as fake news and mental health issues, harm society. Some questions here include:

Does a company (or any human being) have an obligation to improve society?

If so, how do we define improvement?

Facebook takes an extremely liberal stance. At the other end of the spectrum, China is a good example: it began restricting minors’ access to video games because it believes games harm teenagers. Is paternalism the way we want our society to operate? If not, where between these two extremes do we want to draw the line? What other options do we have?

At the third level, the individual level, I want to find out whether I’m on board with Meta’s leadership. Although one person’s opinion cannot represent the whole company, it indicates the company culture, the kind of people Meta attracts, and how they view the trade-off between profit and ethics. The questions here include:

What intentions do people generally have?

Even if their intentions are good, what principles and ethics guide their work? Do I agree with them?

By the end of this summer, I want to develop my own set of technology principles, use them to choose future jobs, and apply them in my daily work if I stay in the tech sector.